Random Thoughts by Fabien Penso

2023

Rust’s performance is insane, but it requires a significant amount of manual work, unlike Rails, which is highly opinionated but gives you everything.

My server-side HTML templates for Constellations used Bootstrap from a CDN, but I wanted to move to a classic asset pipeline. This post explains how I built it.

The way it works:

- All needed assets are built by your asset builder, then copied into the assets directory

- Assets are then copied to public/assets

- Assets are delivered by Actix

- A template helper lets you link to asset files
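The last step, a template helper that links asset files, can be sketched as follows. This is not the Actix/Rust helper from the post: it is a minimal Ruby sketch that assumes a hypothetical fingerprinting scheme, where each file copied to public/assets gets a short content digest in its name and a manifest maps logical names to those public paths. `publish_assets` and `asset_path` are illustrative names.

```ruby
require "digest"
require "fileutils"

# Hypothetical pipeline step: copy every built file from `src_dir` into
# `public_dir`, adding a short content digest to its name for cache busting.
# Returns a manifest such as { "app.css" => "/assets/app-1a2b3c4d.css" }.
def publish_assets(src_dir, public_dir)
  FileUtils.mkdir_p(public_dir)
  Dir.children(src_dir).sort.each_with_object({}) do |name, manifest|
    path = File.join(src_dir, name)
    next unless File.file?(path) # this simple sketch ignores subdirectories

    digest = Digest::SHA256.file(path).hexdigest[0, 8]
    ext = File.extname(name)
    fingerprinted = "#{File.basename(name, ext)}-#{digest}#{ext}"
    FileUtils.cp(path, File.join(public_dir, fingerprinted))
    manifest[name] = "/assets/#{fingerprinted}"
  end
end

# Hypothetical template helper: resolve a logical asset name to its public URL,
# failing loudly when the asset was never built.
def asset_path(manifest, name)
  manifest.fetch(name) { raise ArgumentError, "unknown asset: #{name}" }
end
```

A template would then emit `asset_path(manifest, "app.css")` inside a `<link>` tag. Because file names change whenever content changes, the web server (Actix in the post) can serve public/assets as static files with long cache lifetimes.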

I’d be very interested to hear whether you found this helpful or have suggestions for improvement.

2022

Memory usage of Ruby vs Rust

I currently work at Beam, more specifically on the server-side API that lets our users synchronize their data across all their devices. For privacy reasons, this API is end-to-end encrypted (E2EE), and we don’t see anything except encrypted blobs. The private keys are stored on the user’s device.

This API is currently written in Ruby and called through GraphQL and REST endpoints. It will potentially manage a lot of data, and it became clear Ruby wasn’t a good fit: it’s very hard to stream responses, and Ruby’s memory usage is usually too high. As a Ruby coder since 2005, it pains me to say it.

I spent the last year reading about Rust (and Go) in the evenings and on weekends, and prototyping code to get a feel for the language and what it promises to deliver. We recently decided it was time to work on it during the day and start replacing endpoints. It’s far from over, but I already have some feedback.

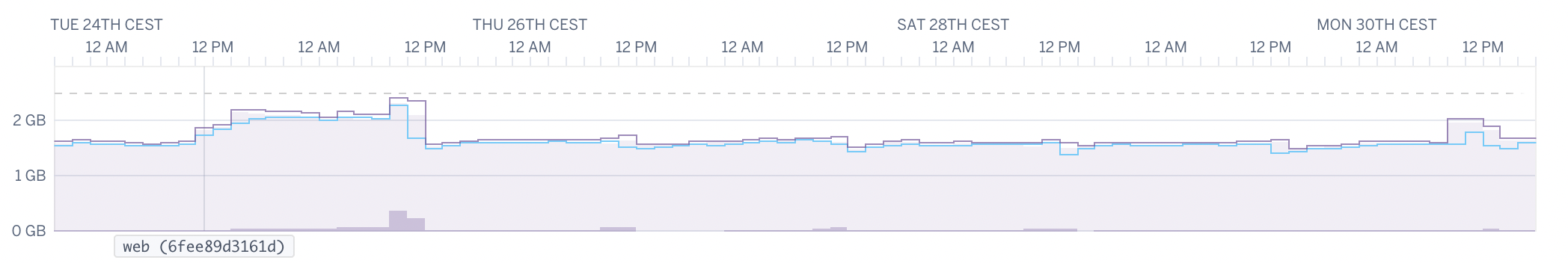

The first endpoint I’m rewriting fetches rows from a PostgreSQL database and streams the results as JSON to the HTTP client. I’m testing it with over 50,000 rows and over 200MB of data. We are currently hosted on Heroku, and the Ruby instance has the following memory metrics.

It uses up to 2GB of memory, which is expected for a Rails stack.

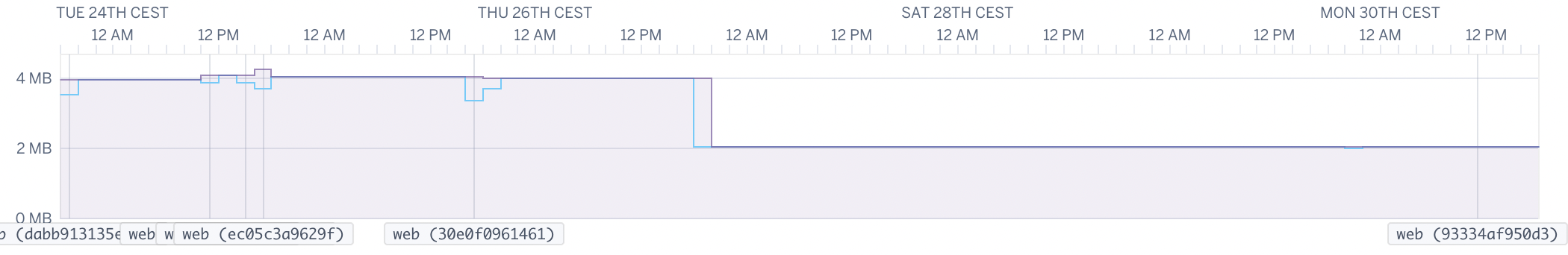

And now the Rust metrics.

The Rust endpoint was built with actix, sqlx, serde, and a few other crates.

It uses up to 4MB… about 500 times less (2GB / 4MB ≈ 512). The benchmarks I ran show a 30% speed improvement as well, which, to be honest, was disappointing. But the Rust instance runs on a different Heroku dyno type, with a 10x smaller cost per month. Moving the Rust instance to the same dyno type as the Ruby instance didn’t improve speed, so I guess the bottleneck is our PostgreSQL instance.

Our Ruby stack is doing a lot more for now, but the result is still impressive. I have the feeling Rust will become very popular for server-side microservices.
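The core idea behind the streaming endpoint is never to hold the whole result set in memory: rows are serialized and written one at a time, wrapped in `[`, `,` and `]`. The real endpoint uses actix, sqlx and serde in Rust. To keep one language across these examples, here is a minimal Ruby sketch of the same technique. `rows` is assumed to be any enumerable, standing in for a database cursor, and `json_array_stream` is an illustrative name.

```ruby
require "json"

# Lazily yield a JSON array chunk by chunk. Only one row is serialized at a
# time, so memory stays flat no matter how many rows the source produces.
def json_array_stream(rows)
  Enumerator.new do |out|
    out << "["
    rows.each_with_index do |row, i|
      out << "," unless i.zero?
      out << JSON.generate(row)
    end
    out << "]"
  end
end
```

A Rack response body only needs to respond to `each`, so an enumerator like this can be returned as the body. Middleware that buffers the whole body (computing an ETag, for example) can quietly undo the benefit, which is part of why streaming through a full Rails stack is harder than it looks.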

Update on June 4th: after releasing this endpoint in production, as stated in this tweet, I actually saw a 12x performance increase while running on a 10x cheaper Heroku dyno. Memory also stayed around 4MB. I suggest reading the comments on this Reddit post.

2021

Improve Docker performance on macOS by 20x

TL;DR — Docker is great to manage your code, but it’s painfully slow on macOS1. By using VirtualBox or Parallels, you can make Rails on Docker on macOS run way faster (20x on M1…). I ran benchmarks so you don’t have to.

For years, when working on a Rails app, I would embed a Vagrantfile in the repository so anyone joining the project could do a vagrant up and start coding.

Docker has since become more popular, and the convenience of Docker Compose for starting dependencies like a database, Redis, or a mail server made it a solid contender to Vagrant. I use both on macOS because of how slow Docker is.

Docker is great on Linux but painfully slow on macOS, and even slower on M1. How slow? And how do you make it way faster? Run it in a Linux VM. I benchmarked the same code and got the following results.

-

I believe this is because of the way Docker does virtualization on macOS. ↩

Publish and host your Jekyll website on IPFS

TL;DR — How I published this Jekyll website on IPFS within minutes, then spent a few more hours improving the user experience.

A few weeks back, I wanted to play with IPFS and decided to deploy my website on it. It was way easier than I anticipated: within hours, I had a pretty good understanding of what IPFS is and how to leverage it, and I had published this website on it.

What is IPFS?

IPFS is a decentralized system for storing and accessing files, websites, applications, and data. Unlike Freenet, where your node keeps other people’s content, IPFS only stores what you uploaded to it or manually decided to cache (“pin” in IPFS terms).

Running a node is super easy: you just need to download their desktop app. You can then make any file available on the network by dragging and dropping it.

It seems IPFS will become one of the default storage layers of Web 3.0, and many NFTs are hosted on it.

Swift CryptoKit and Browser

This previous article explains how to read ChaCha20-Poly1305 encrypted data using Ruby or Python. My first goal is to make sure other languages can read data encrypted within Beam, but the end goal is to decrypt it within your browser, using client-side HTML and JavaScript. Sadly, WebCrypto doesn’t support ChaCha20-Poly1305, so I had to move to AES-GCM instead.

This extensive documentation says to use additionalData for the tag part, but that never worked in my code, and I had to handle the tag manually.

Use the following in Xcode Playground to encrypt a string:

```swift
import UIKit
import CryptoKit

let str = "Hello, playground"
let strData = str.data(using: .utf8)!

// Random 256-bit key, printed as base64 so another language can reuse it
let key = SymmetricKey(size: .bits256)
let keyString = key.withUnsafeBytes { Data($0) }.base64EncodedString()

// AES-GCM with a random nonce; `combined` is nonce + ciphertext + tag
let sealbox = try! AES.GCM.seal(strData, using: key)

print("Key: \(keyString)")
print("Combined: \(sealbox.combined!.base64EncodedString())")
```

The output when running it on my computer (you will obviously get a different result):

```
Key: lQ4F/9K45Ym9K8Qv9CkVrozkTsGij7/OErhzMmhb8Ec=
Combined: NYsQV/IXJDyZgSY3hb/AQapynEBSIDXlO4TdMC+6F6DHmUBOnXEPcE/+sVrz
```
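CryptoKit’s `combined` representation is the 12-byte nonce, followed by the ciphertext, followed by the 16-byte authentication tag. The sample above decodes to 45 bytes, which is 12 + 17 (the length of "Hello, playground") + 16. Whatever the target language, decrypting means splitting those three parts yourself. Here is a minimal Ruby sketch using the standard `openssl` library. It is not the browser code this post is heading toward, and `split_combined`, `open_aes_gcm` and `seal_aes_gcm` are hypothetical helpers. The last one mimics CryptoKit’s layout so the round trip can be checked locally.

```ruby
require "openssl"

NONCE_SIZE = 12 # CryptoKit's default AES.GCM nonce length
TAG_SIZE = 16   # 128-bit authentication tag

# Split a CryptoKit-style combined box: nonce || ciphertext || tag.
def split_combined(combined)
  raise ArgumentError, "box too short" if combined.bytesize < NONCE_SIZE + TAG_SIZE

  nonce = combined.byteslice(0, NONCE_SIZE)
  ciphertext = combined.byteslice(NONCE_SIZE, combined.bytesize - NONCE_SIZE - TAG_SIZE)
  tag = combined.byteslice(-TAG_SIZE, TAG_SIZE)
  [nonce, ciphertext, tag]
end

# Decrypt a base64 key and a base64 combined box, as printed by the playground.
def open_aes_gcm(key_b64, combined_b64)
  key = key_b64.unpack1("m0") # strict base64 decode
  nonce, ciphertext, tag = split_combined(combined_b64.unpack1("m0"))

  cipher = OpenSSL::Cipher.new("aes-256-gcm").decrypt
  cipher.key = key
  cipher.iv = nonce
  cipher.auth_tag = tag # the tag is passed separately, not as associated data
  cipher.auth_data = "" # no associated data was used when sealing
  plaintext = cipher.update(ciphertext) + cipher.final # final raises CipherError on a bad tag
  plaintext.force_encoding(Encoding::UTF_8)
end

# Hypothetical helper mimicking CryptoKit's combined layout, for local testing.
def seal_aes_gcm(key, plaintext)
  cipher = OpenSSL::Cipher.new("aes-256-gcm").encrypt
  cipher.key = key
  nonce = cipher.random_iv # 12 bytes for GCM
  cipher.auth_data = ""
  ciphertext = cipher.update(plaintext) + cipher.final
  nonce + ciphertext + cipher.auth_tag
end
```

On the browser side, WebCrypto’s `crypto.subtle.decrypt` takes the nonce as the `iv` parameter and expects the tag to stay appended to the ciphertext; `additionalData` in `AesGcmParams` is for associated data, not for the tag.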

Swift CryptoKit and Ruby/Python

I spent days figuring out how to decrypt ChaChaPoly encrypted data from Swift CryptoKit using other languages. What should have taken me minutes took me hours. As a time saver, here is how you can decrypt it using Ruby or Python. I ended up reading the source code of swift-crypto to understand what the combined sealbox was doing.

Use the following in Xcode Playground to encrypt a string:

```swift
import UIKit
import CryptoKit

let str = "Hello, playground"
let strData = str.data(using: .utf8)!

// Random 256-bit key, printed as base64 so another language can reuse it
let key = SymmetricKey(size: .bits256)
let keyString = key.withUnsafeBytes { Data($0) }.base64EncodedString()

do {
    // `combined` is nonce (12 bytes) + ciphertext + tag (16 bytes)
    let sealbox = try ChaChaPoly.seal(strData, using: key)
    print("Key: \(keyString)")
    print("Combined: \(sealbox.combined.base64EncodedString())")
} catch {
    print("Encryption failed: \(error)")
}
```

The output when running it on my computer (you will obviously get a different result):

```
Key: j6tifPZTjUtGoz+1RJkO8dOMlu48MUUSlwACw/fCBw0=
Combined: OWFsadrLrBc6ak+6TiYhAI6JKvoQzVMpnRdJ6iE5vEiAhadrCu6EcEQiAs7G
```
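ChaChaPoly’s `combined` box uses the same layout: a 12-byte nonce, the ciphertext, then a 16-byte Poly1305 tag. Here is a minimal Ruby sketch of that first, format-level step, checked against the sample output above. `SealedParts` and `parse_combined` are illustrative names. The decryption itself then needs a ChaCha20-Poly1305 implementation (for example through OpenSSL, assuming your Ruby build exposes that cipher), which this sketch does not exercise.

```ruby
# Parts of a CryptoKit ChaChaPoly.SealedBox, as laid out in `combined`.
SealedParts = Struct.new(:nonce, :ciphertext, :tag)

NONCE_BYTES = 12 # ChaCha20-Poly1305 nonce (IETF variant)
TAG_BYTES = 16   # Poly1305 authentication tag

# Decode the base64 `combined` string printed by the playground and split it.
def parse_combined(combined_b64)
  data = combined_b64.unpack1("m0") # strict base64, raises ArgumentError on bad input
  raise ArgumentError, "box too short" if data.bytesize < NONCE_BYTES + TAG_BYTES

  SealedParts.new(
    data.byteslice(0, NONCE_BYTES),
    data.byteslice(NONCE_BYTES, data.bytesize - NONCE_BYTES - TAG_BYTES),
    data.byteslice(-TAG_BYTES, TAG_BYTES)
  )
end

parts = parse_combined("OWFsadrLrBc6ak+6TiYhAI6JKvoQzVMpnRdJ6iE5vEiAhadrCu6EcEQiAs7G")
sizes = [parts.nonce, parts.ciphertext, parts.tag].map(&:bytesize)
p sizes # prints [12, 17, 16]
```

The 17-byte ciphertext matches "Hello, playground" byte for byte, because ChaCha20 is a stream cipher and doesn’t pad.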

You can decrypt it using Ruby with:

2020

Mac Mini M1

After Apple’s announcement, I ordered an M1 Mac Mini and canceled it when I noticed the non-upgradable RAM. I then reordered it (16GB/1TB), and it just arrived today.

You’ve probably seen many online reviews (I watched tons of them on YouTube), and what everyone says is true. It’s fast, pretty stable, and I can’t hear the fan even while compiling.

Looking at my Geekbench results, you’ll see it’s the fastest machine I own CPU-wise, even faster than my 2020 16” MBP. On GPU compute, it’s slower, but that was expected from a Mac Mini. I’m glad I got rid of the Hackintosh…

Own your email

Using @gmail.com for your email address is like living rent-free in someone else’s house: you can be kicked out any day without warning, with all your belongings inside and no way to access them.

One of my first jobs, around 1997, was being a sysadmin and managing email servers, writing sendmail.cf configuration files without M4, and I should have known better.

Someone who used Gmail for over 10 years recently got locked out without explanation. When all services you use, tools, and all your life are connected to your @gmail.com address, you can imagine how much of a nightmare scenario this is.

Heritage is closing

I started working on Heritage in 2012 to give analog film photographers a place to show and tell about their work. To enforce quality and consistency across the site, I put an invitation system in place: existing members had to invite you if you wanted to upload photos. It also let you host your gallery on your own domain, a feature some of you still use today.

As the years went by, other projects kept me very busy, and I never invested enough energy for Heritage to take off. My last code contribution is over five years old; some libraries are outdated, unmaintained, or even have known bugs. If I had to redo Heritage today, I would do it very differently. But upgrading the existing code would take too much effort, and, let’s be honest, there are now better ways to show your work than what I built.

Since then, more options have become available for photographers to easily publish their work: Adobe Portfolio, Squarespace, Format, Exposure, or even Medium and WordPress. Many photographers simply use Instagram.

Moving away from Hackintosh

After Apple’s announcement, I ordered an M1 Mini, only to cancel it when I noticed the 16GB non-upgradable RAM. I just reordered it, and I plan to retire my seven-year-old Hackintosh. It served me well, but after spending the whole weekend trying to upgrade it, having issues with Clover and OpenCore, and finding out later that Big Sur might not run on it, I decided it was time to move on. The last nail in the coffin was remembering I had to fix iCloud after moving to OpenCore. When using a Hackintosh, you have to find a working serial number that Apple’s servers will accept. After trying ten random ones without luck, I also remembered you might be locked out of Apple services for security reasons, with the only solution being to call them to unlock your account. I can only imagine the explanation I’d have to give to Apple support.

I am way more dependent on my Apple account than before: mobile apps and the App Store, iCloud storage through many Mac apps, sync between devices, iCloud files, Keychain sync, etc. Being locked out of it would suck.

Rereading this seven-year-old post, I remembered I had a Mac mini back then because the MacPro of the time had not been updated for years. The then-new MacPro didn’t fit my needs (lots of internal storage), so I moved to a Hackintosh, as it was way cheaper and, I thought, upgradable. Since then, the only upgrades I did were the GPU (the new OS didn’t support my old GTX 760 graphics card) and more SSD disks.

All those steps I thought were transitional, not meant to last long. They all lasted way longer than expected, and I feel the new M1 mini might last longer than expected as well. Looking at my Geekbench results, you’ll see my Hackintosh (listed as iMac14,2) scored 3719 multi-core. My new 2020 16” MBP is already twice as fast, but the M1 mini beats them all.

That said, the 2008 MacPro I bought was a great machine, still used to its full potential by the friend I sold it to a decade later. So I’m very much looking forward to the next iMac and MacPro with the new Apple chip.

So long, my dear Hackintosh.

Steve Jobs: a fascinating story

John Gruber writes:

Steve Jobs was on medical leave for the first half of 2009. When he returned in early summer, he devoted most of his attention and time to crafting and launching the original iPad, which was unveiled in April 2010. After that, he had meetings scheduled with teams throughout the company. One such meeting was about MacBooks. Big picture agenda. Where does Steve see the future of Mac portables? That sort of thing. My source for the story was someone on that team, in that meeting. The team prepared a veritable binder full of ideas large and small. They were ready to impress. Jobs comes in carrying a then-brand-new iPad and sets it down next to a MacBook the team had ready for demos. “Look at this.” He presses the home button on the iPad: it instantly wakes up. He does it again. The iPad instantly wakes up. Jobs points to the MacBook, “This doesn’t do that. I want you to make this” — he points to the MacBook — “do that” — he points to the iPad. Then he picks up the iPad and walks out of the meeting.

The story is fascinating: it shows how Steve Jobs focused on what matters and didn’t waste any time being politically correct. The whole article about the M1 is interesting. As always, John Gruber is right on point about where Apple is heading.

Your computer isn't yours

Jeffrey Paul writes:

It turns out that in the current version of the macOS, the OS sends to Apple a hash (unique identifier) of each and every program you run, when you run it. (…)

This means that Apple knows when you’re at home. When you’re at work. What apps you open there, and how often.

Apple has complete control over its software, and while I trust them more than Google with my privacy, I don’t understand why they need to know which apps I run on my computer. What yesterday’s issue revealed, when everyone upgraded to Big Sur, is disappointing.

Joe Biden elected president

Joe Biden, in an email to supporters:

“I am honored and humbled by the trust the American people have placed in me and in Vice President-elect Harris. In the face of unprecedented obstacles, a record number of Americans voted. Proving once again, that democracy beats deep in the heart of America.

With the campaign over, it’s time to put the anger and the harsh rhetoric behind us and come together as a nation.

It’s time for America to unite. And to heal. We are the United States of America. And there’s nothing we can’t do, if we do it together. I’m going to speak to the nation tonight and I’d love for you to watch.”

Biden didn’t really win: most people I talked to didn’t vote for him, they voted against Trump. The 2020 election shows just how divided America remains, and Trump’s legacy will last for years. He also received more votes in 2020 than in 2016.

I expect Trump to run for re-election in 2024.

Own your content and your piece of Internet

Like most people who have used the Internet for a long time, I tried and used many, many different online services. Over the years, I published content on websites like Flickr, Medium, Instagram, Facebook pages, Twitter, etc. The list is so long I can’t remember it all. Some are still online, some disappeared with my content, and some I lost interest in.

When looking at where I published content, my biggest regret is publishing outside my own domain name, which prevented me from building a brand over time. It’s even worse when the tool or service doesn’t give me the freedom to leave with an export feature, including my audience details. They say that if something is free, you are the product. More than being the product, you’re also investing time and money in a service that will make money off your back and your viewers. And remember: when those services close, you have no way to get your content back, and your last hope is the Wayback Machine.

I am as guilty as others. I’ve been online since about 1995, involved in creating Internet projects since 1998, and blogging since 2003, and I wish I had spent more time publishing on my own platform instead of being lazy. To keep in touch with readers, I should have offered a newsletter instead of adding a link to a Facebook page, with its useless vanity like button showing off “11.8k people like this”. Facebook then makes you pay to reach them…

The newsletter box I left at the bottom of my photography website only has a few hundred subscribers, but a friend who also uses Heritage for his website has close to 10k. Connecting directly with your audience matters…

A fantastic example is John Gruber, who has been publishing regularly on his website for almost two decades. You can’t expect the same success and consistency, but even if you’re a very irregular writer, I highly recommend you invest in your own domain name and only use tools you can easily move away from, including your audience and your social graph. You have plenty of tools to choose from: I use Jekyll, but you could use a hosted WordPress as long as you only communicate on your own domain.

2016

How to switch a stack without downtime

TL;DR — I joined Stuart in 2015 as CTO, and the first big decision I had to make was moving away from the legacy codebase. How do you do that live, while the business is growing? These are some of the ideas that allowed us to successfully transition from a PHP codebase to our new Ruby stack. This post follows a talk I gave at Kikk (video available) and 50Partners.

This is not a comparison between PHP and Ruby, and I see nothing wrong with using either. We’re actually looking at adding other languages to our stack, like Elixir, Go, or more Node.js, as they all have very good use cases.

Stuart is always hiring coders, and you should talk to me if you’d like to be part of it. We offer competitive salaries and a good environment, and we allow remote work.

I am also available if you need a speaker at a conference.

Sunsetting Faast

TL;DR — I’ve decided to sunset Faast, the push app I’ve been running for years. Expect it to stop working within weeks, probably by the end of April. I’ll switch to Reeder for my RSS feeds; I haven’t found anything for push.

Faast is a push application I created years ago; its previous version, Push4, dates back to 2009. The App Store has changed a lot since, and you’ve probably already read many blog posts from indie developers saying they can’t make a living from it. That’s truer now than ever, and the only sustainable way to be a full-time iOS developer is to freelance for bigger brands (I’m glad I don’t have to do that; I’ve been a CTO for various projects for years).

I’ve been running Faast at a loss for years, mostly because I use it daily. The difference between Faast and most other mobile apps is that it required servers, and those are very expensive to run. They gathered RSS feeds, tweets, and emails for you, and sent pushes through Apple Push Notification servers. Faast was a special case: it required strong server-side skills and strong frontend skills as well. I know both sides, but it still involves way too much work, and Apple makes it hard for developers. You have to spend time every year updating your app, adding features, and making sure new iOS releases don’t break it.

App Store users aren’t willing to pay for products anymore, unless they include candies and expensive in-app purchases for skipping levels. They don’t understand how difficult it is to write an application like Faast, and they complain very easily, forgetting that the app developer is a real human whom they don’t need to threaten. I’m happy to be out of the Apple App Store too.

I’d like to publicly thank K. Payandeh, who designed the application icon and helped so much with the design. I love this icon and I’m sad to see it go; it felt like part of my iPhone for so long.

2015

Which Droplet should you get at DigitalOcean?

TL;DR — If you think paying more at DigitalOcean gets you more CPU or IO, think again. The 512M instance gets you the most bang for your buck, and is the best virtual server price/performance-wise across multiple providers, as long as you can manage the RAM limitation and the disk size.

I’ve recently joined Cloudscreener as CTO. We benchmark public cloud providers and look at what CPU/RAM/IO performance you get from each instance type, and what ratio (bang for your buck) you get for each of them. We do this for 12 public providers, in multiple regions and for most instance types. Our product isn’t publicly available yet, but you can get early access by visiting our product page and registering for free. At the time of this blog post, it is not fully optimized and you may experience slow page loads. I suggest trying later or waiting until we optimize it within the next week.
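The "bang for your buck" ratio is a benchmark score divided by the price. Here is a minimal Ruby sketch that ranks offers by that ratio. The numbers are made-up placeholders, not Cloudscreener or DigitalOcean data, and `Offer` and `rank_by_value` are illustrative names. It shows why the cheapest instance wins when larger sizes don’t deliver proportionally more CPU or IO.

```ruby
# Hypothetical instance offer: benchmark score and monthly price in dollars.
Offer = Struct.new(:name, :score, :monthly_price) do
  # Performance per dollar: higher is better.
  def value
    score.to_f / monthly_price
  end
end

# Best value first.
def rank_by_value(offers)
  offers.sort_by { |o| -o.value }
end

# Placeholder numbers for illustration only: price doubles at each size,
# but the score barely moves.
offers = [
  Offer.new("small", 100, 5),
  Offer.new("medium", 110, 10),
  Offer.new("large", 130, 20)
]
rank_by_value(offers).each { |o| puts format('%-6s %.1f points/$', o.name, o.value) }
```

In practice the score would come from a weighted mix of CPU, RAM and IO benchmarks, and the weighting is where most of the judgment lies.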

Realtime update with Highcharts, Rails and SSE

TL;DR — I started writing this article a long time ago while I was working for @mickael at ProcessOne. I never finished it, and a few things have changed since, but it might still help you do realtime analytics for your service.

Just looking for the code? Check this github repository.
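Server-Sent Events are plain text over a long-lived HTTP response. Each event is a few `field: value` lines followed by a blank line, and the browser’s `EventSource` parses them. Rails can write this format for you through `ActionController::Live::SSE`. The minimal Ruby sketch below uses a hypothetical `sse_event` helper, not the code from the linked repository, and only shows the wire format a Highcharts page would consume.

```ruby
require "json"

# Format one Server-Sent Event. Multi-line payloads need one `data:` line
# per line; the trailing blank line tells the client the event is complete.
def sse_event(payload, event: nil, id: nil)
  data = payload.is_a?(String) ? payload : JSON.generate(payload)
  lines = []
  lines << "id: #{id}" if id
  lines << "event: #{event}" if event
  data.split("\n", -1).each { |line| lines << "data: #{line}" }
  lines.join("\n") + "\n\n"
end
```

In a controller that includes `ActionController::Live`, each of these strings would be written to `response.stream` with the `Content-Type` set to `text/event-stream`. On the page, an `EventSource` listener for the `point` event could parse the data and pass it to Highcharts’ `series.addPoint`.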

Monitor updates: 34UC97 LG vs P2715Q DELL

I’ve just acquired two new screens, one for work: the 34” LG 34UC97, and one for home: the 4k 27” DELL P2715Q. If you’re reading this, you’re probably interested in one of them. Here is my feedback after a few months of use on each. I kept both, for different purposes.

While reading forums about them, I changed my mind, and I’m glad I did. At first I was looking for a larger screen for my new office and my 13” retina MacBook, and I had already selected the DELL 4k. That laptop can drive it at 60Hz, and I thought retina would be a nice feature. But while watching reviews, I ended up watching this video and changed my mind.

Retina is a nice feature, but it doesn’t change the way you work. It doesn’t improve productivity. I remember the day I switched from a 24” to a Dell 30” in 2008, and the wow effect when I put that new screen on my desk. At first I actually thought I had gone too big, but a few days later I was converted. I remember gaining productivity, and a few studies tend to confirm it (google for more).

Improving your Blog visibility

TL;DR — I spent years running this blog, and if you look at my page rank or search for any subject I blogged about, you’ll find I’ve achieved a pretty good score. This is a list of the tricks I used to achieve it.

I recently moved my blog from WordPress to Jekyll and felt like blogging again. I blogged for years, but Facebook, Twitter, and other social networks kind of took over for short thoughts1. And I only had short thoughts over the past few years.

Improving your blog visibility can be summarized in 6 steps:

- Make your website load as fast as possible

- Generate clean HTML, include meta description tags and clear title

- Integrate social networks metas for Twitter and Facebook

- Sitemaps, to help search engines

- Microformats

- RSS feeds, and pinging the outside world once you write new content
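For the sitemap step, Jekyll users usually reach for the `jekyll-sitemap` plugin, but the format itself is small. Here is a minimal Ruby sketch of a sitemap following the sitemaps.org 0.9 protocol. `pages` is assumed to be a list of URL and last-modified date pairs, and `sitemap_xml` and `xml_escape` are illustrative names.

```ruby
require "date"

XML_ESCAPES = { "&" => "&amp;", "<" => "&lt;", ">" => "&gt;", '"' => "&quot;", "'" => "&apos;" }.freeze

# Escape the five XML special characters (URLs often contain `&`).
def xml_escape(text)
  text.gsub(/[&<>"']/, XML_ESCAPES)
end

# Build a sitemap.xml document from [url, Date] pairs.
def sitemap_xml(pages)
  entries = pages.map do |url, lastmod|
    "  <url>\n" \
      "    <loc>#{xml_escape(url)}</loc>\n" \
      "    <lastmod>#{lastmod.strftime('%Y-%m-%d')}</lastmod>\n" \
      "  </url>\n"
  end
  <<~XML
    <?xml version="1.0" encoding="UTF-8"?>
    <urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
    #{entries.join}</urlset>
  XML
end
```

The resulting file is usually served at the site root and referenced from robots.txt with a `Sitemap:` line so crawlers can find it.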

Vagrant for Rails development

I used to use VMware with a Debian guest for all my Rails development. It gave me a configuration similar to my servers, and it contained my code, so I could update my macOS laptop or move to another development computer without losing any time reinstalling anything except VMware.

However, a coworker would have to install VMware and his own Linux to start coding (or use anything else of his choice). Vagrant lets you commit your Vagrantfile inside your git repository, making sure everyone uses the same environment and allowing a new coworker to start coding right away after a simple vagrant up.

The following Vagrantfile for Rails allows just that. It sets a root password for MySQL without the need to use the ncurses interface, installs rvm and runs bundler. If you want to reuse this you should:
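Installing MySQL without the ncurses password prompt usually means preseeding debconf before `apt-get install`. Here is a minimal Ruby sketch of a hypothetical `mysql_provision_script` helper that builds that provisioning script. It is not the Vagrantfile from the post, and the `mysql-server/root_password` keys assume Debian’s classic `mysql-server` package.

```ruby
require "shellwords"

# Build a shell provisioning script that installs MySQL non-interactively.
# Debconf answers are preseeded so apt-get never opens the ncurses prompt.
def mysql_provision_script(root_password)
  answer = "password #{root_password}"
  <<~SH
    set -e
    export DEBIAN_FRONTEND=noninteractive
    echo #{Shellwords.escape("mysql-server mysql-server/root_password #{answer}")} | debconf-set-selections
    echo #{Shellwords.escape("mysql-server mysql-server/root_password_again #{answer}")} | debconf-set-selections
    apt-get update
    apt-get install -y mysql-server
  SH
end
```

Because a Vagrantfile is plain Ruby, the helper can be called directly from it with `config.vm.provision "shell", inline: mysql_provision_script("secret")`. The shell provisioner runs as root by default, which is what debconf and apt-get need. Installing rvm and running bundler are better left to a separate, unprivileged provisioner.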

2014

Switching to Hackintosh

TL;DR — I bought standard PC hardware components and I’m waiting for its delivery to install MacOSX and use this hackintosh as my daily development setup.

My current daily computer is a mid-2011 Mac mini I bought while waiting for a new MacPro. At the time, no one knew Apple would release the new trash can MacPro, and since I really wanted Thunderbolt, I didn’t want to invest in the existing MacPro, which had not seen updates for a long time.

I got a Mac mini while waiting for a new powerful computer, and an external RAID 5 FW800 disk array for my photos (currently using about 3TB). That was a temporary setup, but the temporary setup lasted 4 years. A Dell 30” screen died along the way (I fixed it myself later on), and I got the 27” Thunderbolt Display. Going from 30” to 27” was actually harder than I thought.

Faster capistrano deploys

TL;DR — I reduced deploy times from 5 minutes to less than 15 seconds by replacing the standard Capistrano deploy tasks with a simpler, Git-based workflow and avoiding slow, unnecessary work.1

I worked on these deploy recipes while working for @mickael at ProcessOne. If you enjoy challenges and are looking for a Ruby job, you should talk to us.

I recently went from 5 minutes to about 15 seconds for my deploys. That feels much better… Spending minutes (or longer) deploying a regular Rails application was pissing me off; I want to deploy within seconds. This great article from Code Climate convinced me I should just change the way Capistrano works, and do it my own way.

What I thought would take a few hours of work ended up taking days of full-time work, and a lot of hassle. I first looked at recap, but it’s based on Capistrano 2, then at mina, but it only targets a single host. Fabric seems nice, but I didn’t feel like using it either.
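The Git-based approach boils down to keeping one checkout on each server, moving it to the new revision, and skipping work whose inputs did not change. Here is a minimal Ruby sketch of a hypothetical `git_deploy_script` helper that builds such a remote script. It is not the recipes from the post, and the path, branch name and restart command are placeholders. In Capistrano 3, a script like this could be run with `execute` inside an `on roles(:app)` block.

```ruby
require "shellwords"

# Build the remote shell script for a Git-based deploy: update a single
# checkout in place, then only reinstall gems or recompile assets when
# their inputs changed between the old and the new revision.
def git_deploy_script(app_dir:, branch: "master", restart: "touch tmp/restart.txt")
  <<~SH
    set -e
    cd #{Shellwords.escape(app_dir)}
    OLD=$(git rev-parse HEAD)
    git fetch origin #{Shellwords.escape(branch)}
    git reset --hard #{Shellwords.escape("origin/#{branch}")}
    git diff --quiet "$OLD" HEAD -- Gemfile.lock || bundle install
    git diff --quiet "$OLD" HEAD -- app/assets || RAILS_ENV=production bundle exec rake assets:precompile
    #{restart}
  SH
end
```

`git diff --quiet` exits with 0 when nothing changed, so the slow `bundle install` and asset compilation only run when their inputs moved. The trade-off compared to Capistrano’s release directories is that rolling back means resetting to a previous commit instead of switching a symlink.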

-

This quote is stolen from this great article from Code Climate. It’s also the article that convinced me to do it the way they did, except they used Capistrano 2 and I used Capistrano 3 ↩

France Info interview about Ladakh

This week, I was interviewed on Ingrid Pohu’s France Info show about my previous trip to Ladakh.

It’s always hard to listen to yourself: I feel like I talk too fast, don’t have enough time to say what I have in mind, and stay too superficial. But that’s radio: little time to cover such a region…

I hope it will make you want to travel.

Videos riding Royal Enfield in Ladakh

July 2012. During my last trip with Arnaud le Canu, each of us carried a GoPro camera. Arnaud spent days (weeks?) editing what he brought back, and the result is finally available. Since he only traveled 3 weeks with me, the videos cover the trip from Delhi to Leh, through Kinnaur, Spiti, and Lahaul.

Click videos traveling in Ladakh, on Royal Enfield to view a complete list.

I’m going to travel again soon, and since people following this blog are probably only interested in technical posts, I created another one focusing on my travels. You can see it here: my travel blog. The first post explains how I started traveling in India in 2006, and the one named Going from Delhi to Ladakh on Enfield tells more about my previous trip.

Packing for a 6 months trip

In recent years, I traveled as a backpacker several months per year, and ended up removing most of the stuff from my bag. I’m about to go to India for the 4th time in July, to ride an Enfield all the way from Delhi to Ladakh. I already went to Ladakh last year, but I want to cover more areas, at a slower pace. This trip might not be 6 months long; it depends on how I feel on the road. I might come back after 3 months if I want to.

Everything in my bag found its use in previous trips, so you might be interested in what goes in. I mention a lot of brands because I love them; none paid me to write this blog post, and links are not affiliate links. If you know of any brands I should look at, please let me know.

You can view an annotated photo on flickr, and my photography from my previous trips.

15 years ago, I founded LinuxFr

Note to French speakers: this post was translated and posted as this LinuxFr journal entry. Thanks to Patrick.

1998: the Internet grows faster than anyone expects. I’m 19 years old and still live at my parents’ house, hang out on IRC, use FTP to download, and run Linux for fun. I’m a complete geek.

I decide to create a website, though I’m not sure what I’ll do with it. I don’t know if I want something closer to FreshMeat or Slashdot, but I buy a domain name anyway. I hang out on #Linuxfr, an IRC channel, and decide linuxfr.org would be a nice domain name. Its first whois description is “Utilisateurs débiles de Linux”, which translates to something like Stupid Linux Users. We kept this for years.

The first server hosting the website is a 486DX4 100MHz with an open case, under my feet, at my employer’s office. It later moves to paid hosting, and once the site becomes popular, we’ll never have to pay again1.

-

See a nice history Bruno has put together. ↩

12 gems I use in most projects

- devise: Flexible authentication, install devise-async if using sidekiq

- rspec: Behaviour Driven Development

- capistrano: Executing commands in parallel on multiple remote machines, simplify and automate deployment

- aasm: Adding finite state machines to Ruby classes. Try state_machine if you need support for multiple columns within the same model

- tabs_on_rails: Creating tabs and navigation menus

- faker: Generates fake data, very handy with factory_girl for tests

- brice: Irb goodness for the masses

- webmock: Stubbing and setting expectations on HTTP requests

- sidekiq: Efficient message processing, Resque compatible

- carrierwave: Flexible way to upload files

- will_paginate: Pagination library; I use it with will_paginate-bootstrap, as I use Bootstrap most of the time

- whenever: Clear syntax for writing and deploying cron jobs

Which ones do you use yourself? Let me know over Twitter.

Update

Some more I got from you guys:

- better_errors: Better Errors replaces the standard Rails error page with a much better and more useful error page. It is also usable outside of Rails in any Rack app as Rack middleware.

- pry: An IRB replacement

- simple_form: Rails forms made easy.

- irbtools: Improves Ruby’s irb console. Unlike pry, you are still in your normal irb, but you have colors and lots of helpful methods. It’s designed to work out-of-the-box so there is no reason to not use it!

- nokogiri: Nokogiri (鋸) is an HTML, XML, SAX, and Reader parser. Among Nokogiri’s many features is the ability to search documents via XPath or CSS3 selectors.

- bundler: Bundler keeps ruby applications running the same code on every machine. Of course I’d use this in every single project.

- kaminari: A Scope & Engine based, clean, powerful, customizable and sophisticated paginator for modern web app frameworks and ORMs. Seems like a nice replacement for will_paginate

- Active Admin: Framework for creating administration style interfaces.

Getting a job in San Francisco

Read this first

I wrote this post because I was receiving emails from friends about my past experience, and I wanted to gather all my advice in a single post.

I now receive more emails from readers than ever, at least a few per week, asking me questions about getting a job in San Francisco. I’d love for you to blog about, link to, and talk about this post, and if you really need to reach me, @fabienpenso is best. But while I would love to answer every one of you over email, I just can’t.

How to repair your dead DELL 30" 3008 WFP

Short story

- Buy a single 3-euro diode (or a few…)

- Read this article to disassemble your screen

- Change the defective diode using a soldering gun

- Still not working? Buy a new power board

Long story

I bought myself a 30” DELL 3008 WFP screen in 2008 (for 1,600 euros). I was super happy at the time, and it even took me a few days to get used to so much space on my desktop. I had decided on that purchase after reading articles mentioning how much faster you are with a bigger desktop, and, what the heck, being on a computer all day long is my job.